- Startupizer 2 3 8 – Advanced Login Handlers

Prepare for your ServSafe exams with our free ServSafe practice tests. The ServSafe Food Safety Training Program is developed and run by the National Restaurant Association to help train the food service industry on all aspects of food safety.

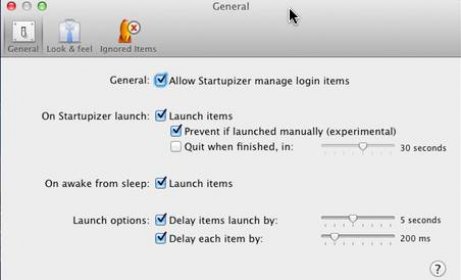

Startupizer is an advanced, yet simple-to-use login-items handler. It greatly enhances login items from your account settings in OS X System Preferences. You can choose among several criteria which determine whether and when a specific item should be started. Different criteria can be freely combined for each item in the login list.

The program includes the following training/certification courses: ServSafe Food Handler, ServSafe Manager, ServSafe Alcohol, and ServSafe Allergens.

Our free ServSafe practice tests (2021 Update) are listed below. View the 2021 ServSafe test questions and answers! Our free ServSafe sample tests provide you with an opportunity to assess how well you are prepared for the actual ServSafe test and then concentrate on the areas you need work on.

Summary: Use our free ServSafe practice tests to pass your exam.

ServSafe Practice Tests from Test-Guide.com

ServSafe Food Handler Practice Tests

ServSafe Manager Practice Tests

Test-Guide.com's sample ServSafe questions are an excellent way to study for your upcoming ServSafe exams. Our sample tests require no registration (or payment!). The questions are categorized based on the ServSafe test outline and are immediately scored at the end of the quiz.

Once you are finished with the quiz, you will be presented with a score report which includes a complete rationale (explanation) for every question you got wrong. We will be adding more sample test questions in the near future, so please come back often. If you like these ServSafe practice questions, please make sure to share this resource with your friends and colleagues.

ServSafe Study Guides and Resources

In preparing for your ServSafe certification exam, you may find these resources helpful:

| Resource | Notes | Provider |
| --- | --- | --- |
| ServSafe Getting Started Guide | Check out this guide on how to get started. Information for all ServSafe programs. | ServSafe |
| ServSafe FAQs | Complete list of frequently asked questions. | ServSafe |
| ServSafe Food Handler Flashcards | If the practice tests above aren't enough, check out these flashcards for additional help. 120+ terms. | Quizlet |

ServSafe Practice Test Benefits

There are many benefits of preparing for your ServSafe exam with practice tests. Studying for your ServSafe test using sample questions is one of the most effective study practices you can use. The advantages of using sample ServSafe tests include:

- Understanding the Test Format - Every standardized test has its own unique format. As you take practice ServSafe tests you will become comfortable with the format of the actual ServSafe test. Once the test day arrives you will have no surprises!

- Concentrating Your Study - As you take more and more sample tests you begin to get a feel for the topics that you know well and the areas that you are weak in. Many students waste a lot of valuable study time by reviewing material that they are good at (often because it is easier or makes them feel better). The most effective way to study is to concentrate on the areas that you need help with.

- Increasing Your Speed - Some of the ServSafe exams are timed. Although most students who take the ServSafe feel that there is sufficient time, taking the ServSafe practice tests with self-imposed timers helps you budget your time effectively.

ServSafe Exam Overview

ServSafe Food Handler Certification Exam

The ServSafe Food Handler program is designed to teach food safety to non-management food service employees. The training covers all areas of food safety, including: basic food safety, personal hygiene, cross-contamination and allergens, time and temperature, and cleaning and sanitation. Some food service employees may be asked to take an optional job-specific section as directed by their manager. The course takes approximately 60 to 90 minutes.

The ServSafe Food Handler Exam is an untimed 40-question test. To receive a ServSafe Food Handler certificate, you must score better than 75% (i.e., answer more than 30 questions correctly).

ServSafe Manager Certification

The ServSafe Food Safety Program for Managers is designed to provide food safety training to food service managers. Upon completion of the course, and passing of an exam, students will receive a ServSafe Food Protection Manager Certification, which is accredited by the ANSI-Conference for Food Protection (CFP). The training incorporates the latest FDA Food Code as well as essential food safety best practices.

The ServSafe Manager training covers the following concepts:

- Food Safety Regulations

- The Importance of Food Safety

- Time and Temperature Control

- Good Personal Hygiene

- Safe Food Preparation

- Preventing Cross-Contamination

- Cleaning and Sanitizing

- HACCP (Hazard Analysis and Critical Control Points)

- Receiving and Storing Food

- Methods of Thawing, Cooking, Cooling and Reheating Food

The ServSafe Manager exam is given in a proctored environment and has 90 multiple-choice questions. You are required to score 75% or better on the exam to receive certification. There is a 2-hour time limit on the exam.

ServSafe Alcohol Training and Certification

The ServSafe Alcohol training program is intended to help alcohol service providers (e.g., bartenders, servers, and hosts) safely handle situations they may encounter when serving alcohol. The ServSafe Alcohol program provides training on the following topics: alcohol laws and responsibilities, intoxication levels, age identification, and dealing with difficult situations.

There are two exams available: Primary ServSafe Alcohol and Advanced ServSafe Alcohol. The Primary ServSafe Alcohol exam covers the basic aspects of alcohol service. The Primary exam is an untimed 40-question exam that requires a 75% passing score. The Advanced Alcohol exam covers more advanced topics across a broader area of alcohol service. The Advanced exam is an untimed 60-question exam that requires an 80% passing score.

ServSafe Allergens

The ServSafe Allergens certification is a recent addition to the ServSafe family and is currently utilized in Massachusetts and Rhode Island.

These ServSafe Practice Exams are intended to help you receive your ServSafe certificate. If you know of any other study resources, please let us know in the comments below.

ServSafe FAQS

What is the best way to prepare for the ServSafe exam?

What are the different ServSafe exams?

Is the ServSafe exam hard?

Last Updated: 01/5/2021

Note

scrapy.log has been deprecated alongside its functions in favor of explicit calls to the Python standard logging. Keep reading to learn more about the new logging system.

Scrapy uses logging for event logging. We'll provide some simple examples to get you started, but for more advanced use-cases, reading the logging module's documentation thoroughly is strongly recommended.

Logging works out of the box, and can be configured to some extent with the Scrapy settings listed in Logging settings.

Scrapy calls scrapy.utils.log.configure_logging() to set some reasonable defaults and handle those settings in Logging settings when running commands, so it's recommended to manually call it if you're running Scrapy from scripts.

Log levels

Python's builtin logging defines 5 different levels to indicate the severity of a given log message. Here are the standard ones, listed in decreasing order:

- logging.CRITICAL - for critical errors (highest severity)
- logging.ERROR - for regular errors
- logging.WARNING - for warning messages
- logging.INFO - for informational messages
- logging.DEBUG - for debugging messages (lowest severity)

How to log messages

Here's a quick example of how to log a message using the logging.WARNING level:
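A minimal sketch of such a call, using only the standard library. The message text is an illustrative placeholder:

```python
import logging

# Module-level helper: logs through the root logger at WARNING severity.
logging.warning("This is a warning")
```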

There are shortcuts for issuing log messages on any of the standard 5 levels, and there's also a general logging.log method which takes a given level as argument. If needed, the last example could be rewritten as:
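As a sketch of the general form, logging.log takes the level as its first argument. The message text is again a placeholder:

```python
import logging

# Same effect as logging.warning(...), with the level passed explicitly.
logging.log(logging.WARNING, "This is a warning")
```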

On top of that, you can create different 'loggers' to encapsulate messages. (For example, a common practice is to create different loggers for every module). These loggers can be configured independently, and they allow hierarchical constructions.

The previous examples use the root logger behind the scenes, which is a top level logger where all messages are propagated to (unless otherwise specified). Using logging helpers is merely a shortcut for getting the root logger explicitly, so this is also an equivalent of the last snippets:
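A short sketch of fetching the root logger explicitly. Calling logging.getLogger() with no name returns it:

```python
import logging

# getLogger() with no argument returns the root logger.
logger = logging.getLogger()
logger.warning("This is a warning")
```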

You can use a different logger just by getting its name with the logging.getLogger function:
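For instance, assuming a logger named "mycustomlogger" (the name is arbitrary and chosen only for illustration):

```python
import logging

# Any string works as a name; dots in the name create a hierarchy.
logger = logging.getLogger("mycustomlogger")
logger.warning("This is a warning")
```

Records sent to this logger still propagate to the root logger unless propagation is turned off.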

Finally, you can ensure having a custom logger for any module you're working on by using the __name__ variable, which is populated with the current module's path:

See also

Basic Logging Tutorial

Further documentation on loggers

Logging from Spiders

Scrapy provides a logger within each Spider instance, which can be accessed and used like this:
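A minimal sketch of a spider that logs through self.logger. The spider name and start URL are placeholders, and the fallback class is only a stand-in (an assumption of this sketch, not Scrapy's implementation) so the snippet also runs where Scrapy is not installed:

```python
import logging

try:
    from scrapy import Spider
except ImportError:
    # Minimal stand-in so the sketch runs without Scrapy installed.
    class Spider:
        name = None

        @property
        def logger(self):
            return logging.getLogger(self.name)


class MySpider(Spider):
    name = "myspider"  # placeholder spider name
    start_urls = ["https://scrapy.org"]  # placeholder URL

    def parse(self, response):
        # self.logger is named after the spider ("myspider" here).
        self.logger.info("Parse function called on %s", response.url)
```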

That logger is created using the Spider's name, but you can use any custom Python logger you want. For example:
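A sketch of the custom-logger variant, assuming a module-level logger named "mycustomlogger". The names and URL are placeholders, and the empty fallback class only lets the snippet run without Scrapy:

```python
import logging

try:
    from scrapy import Spider
except ImportError:
    class Spider:  # empty stand-in so the sketch runs without Scrapy
        pass

# A module-level logger that is independent of the spider's name.
logger = logging.getLogger("mycustomlogger")


class MySpider(Spider):
    name = "myspider"
    start_urls = ["https://scrapy.org"]

    def parse(self, response):
        logger.info("Parse function called on %s", response.url)
```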

Logging configuration

Loggers on their own don't manage how messages sent through them are displayed. For this task, different 'handlers' can be attached to any logger instance and they will redirect those messages to appropriate destinations, such as the standard output, files, emails, etc.

By default, Scrapy sets and configures a handler for the root logger, based on the settings below.

Logging settings

These settings can be used to configure the logging:

- LOG_FILE
- LOG_ENABLED
- LOG_ENCODING
- LOG_LEVEL
- LOG_FORMAT
- LOG_DATEFORMAT
- LOG_STDOUT
- LOG_SHORT_NAMES

The first couple of settings define a destination for log messages. If LOG_FILE is set, messages sent through the root logger will be redirected to a file named LOG_FILE with encoding LOG_ENCODING. If unset and LOG_ENABLED is True, log messages will be displayed on the standard error. Lastly, if LOG_ENABLED is False, there won't be any visible log output.

LOG_LEVEL determines the minimum level of severity to display; messages with lower severity will be filtered out. It ranges through the possible levels listed in Log levels.

LOG_FORMAT and LOG_DATEFORMAT specify formatting strings used as layouts for all messages. Those strings can contain any placeholders listed in logging's logrecord attributes docs and datetime's strftime and strptime directives respectively.

If LOG_SHORT_NAMES is set, then the logs will not display the Scrapy component that prints the log. It is unset by default, hence logs contain the Scrapy component responsible for that log output.

Command-line options

There are command-line arguments, available for all commands, that you can use to override some of the Scrapy settings regarding logging.

- --logfile FILE: Overrides LOG_FILE
- --loglevel/-L LEVEL: Overrides LOG_LEVEL
- --nolog: Sets LOG_ENABLED to False

See also

logging.handlers: Further documentation on available handlers

Custom Log Formats

A custom log format can be set for different actions by extending the LogFormatter class and making LOG_FORMATTER point to your new class.

scrapy.logformatter.LogFormatter

Class for generating log messages for different actions.

All methods must return a dictionary listing the parameters level, msg and args which are going to be used for constructing the log message when calling logging.log.

Dictionary keys for the method outputs:

- level is the log level for that action; you can use those from the python logging library: logging.DEBUG, logging.INFO, logging.WARNING, logging.ERROR and logging.CRITICAL.
- msg should be a string that can contain different formatting placeholders. This string, formatted with the provided args, is going to be the log message for that action.
- args should be a tuple or dict with the formatting placeholders for msg. The final log message is computed as msg % args.

Users can define their own LogFormatter class if they want to customize how each action is logged or if they want to omit it entirely. In order to omit logging an action the method must return None.

Here is an example on how to create a custom log formatter to lower the severity level of the log message when an item is dropped from the pipeline:
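A sketch of such a formatter, assuming the dropped(item, exception, response, spider) signature documented in this section. The class name PoliteLogFormatter and the message layout are illustrative, and the empty fallback base class is only there so the snippet runs where Scrapy is not installed:

```python
import logging
import os

try:
    from scrapy.logformatter import LogFormatter
except ImportError:
    class LogFormatter:  # empty stand-in so the sketch runs without Scrapy
        pass


class PoliteLogFormatter(LogFormatter):
    def dropped(self, item, exception, response, spider):
        # Return the level/msg/args dictionary described above.
        return {
            "level": logging.INFO,  # lowered from logging.WARNING
            "msg": "Dropped: %(exception)s" + os.linesep + "%(item)s",
            "args": {"exception": exception, "item": item},
        }
```

To take effect in a project, the LOG_FORMATTER setting would need to point at the import path of this class.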

crawled(request, response, spider)

Logs a message when the crawler finds a webpage.

download_error(failure, request, spider, errmsg=None)

Logs a download error message from a spider (typically coming from the engine).

New in version 2.0.

dropped(item, exception, response, spider)

Logs a message when an item is dropped while it is passing through the item pipeline.

item_error(item, exception, response, spider)

Logs a message when an item causes an error while it is passing through the item pipeline.

scraped(item, response, spider)

Logs a message when an item is scraped by a spider.

spider_error(failure, request, response, spider)

Logs an error message from a spider.

New in version 2.0.

Advanced customization

Because Scrapy uses the stdlib logging module, you can customize logging using all features of stdlib logging.

For example, let's say you're scraping a website which returns many HTTP 404 and 500 responses, and you want to hide all messages like this:

The first thing to note is a logger name - it is in brackets: [scrapy.spidermiddlewares.httperror]. If you get just [scrapy] then LOG_SHORT_NAMES is likely set to True; set it to False and re-run the crawl.

Next, we can see that the message has INFO level. To hide it we should set logging level for scrapy.spidermiddlewares.httperror higher than INFO; next level after INFO is WARNING. It could be done e.g. in the spider's __init__ method:
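A standard-library sketch of that level change. The helper name silence_httperror_info is hypothetical. In a spider, the same two calls would sit at the top of __init__, before calling super().__init__(*args, **kwargs):

```python
import logging


def silence_httperror_info() -> None:
    """Raise the httperror logger's threshold so INFO records are dropped."""
    logger = logging.getLogger("scrapy.spidermiddlewares.httperror")
    logger.setLevel(logging.WARNING)
```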

If you run this spider again then INFO messages from the scrapy.spidermiddlewares.httperror logger will be gone.

scrapy.utils.log module

scrapy.utils.log.configure_logging(settings=None, install_root_handler=True)

Initialize logging defaults for Scrapy.

Parameters:

- settings (dict, Settings object or None) – settings used to create and configure a handler for the root logger (default: None).
- install_root_handler (bool) – whether to install root logging handler (default: True)

This function does:

- Route warnings and twisted logging through Python standard logging
- Assign DEBUG and ERROR level to Scrapy and Twisted loggers respectively
- Route stdout to log if LOG_STDOUT setting is True

When install_root_handler is True (default), this function also creates a handler for the root logger according to given settings (see Logging settings). You can override default options using the settings argument. When settings is empty or None, defaults are used.

configure_logging is automatically called when using Scrapy commands or CrawlerProcess, but it is not called automatically when running custom scripts using CrawlerRunner. In that case, calling it explicitly is not required but is recommended.

Another option when running custom scripts is to manually configure the logging. To do this you can use logging.basicConfig() to set a basic root handler.

Note that CrawlerProcess automatically calls configure_logging, so it is recommended to only use logging.basicConfig() together with CrawlerRunner.

This is an example on how to redirect INFO or higher messages to a file:
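A standard-library sketch of that setup. The helper name and the format string are illustrative. In a Scrapy script using CrawlerRunner, one would presumably call configure_logging(install_root_handler=False) first so that Scrapy does not install its own root handler:

```python
import logging


def log_info_to_file(path: str) -> None:
    """Send INFO-and-above records from the root logger to the file at path."""
    logging.basicConfig(
        filename=path,
        format="%(levelname)s: %(message)s",
        level=logging.INFO,
        force=True,  # Python 3.8+: replace handlers already on the root logger
    )
```

For example, log_info_to_file("log.txt") followed by logging.info("started") appends a line reading "INFO: started" to log.txt, while DEBUG records are filtered out.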

Refer to the Scrapy documentation on running Scrapy from a script for more details about using Scrapy this way.